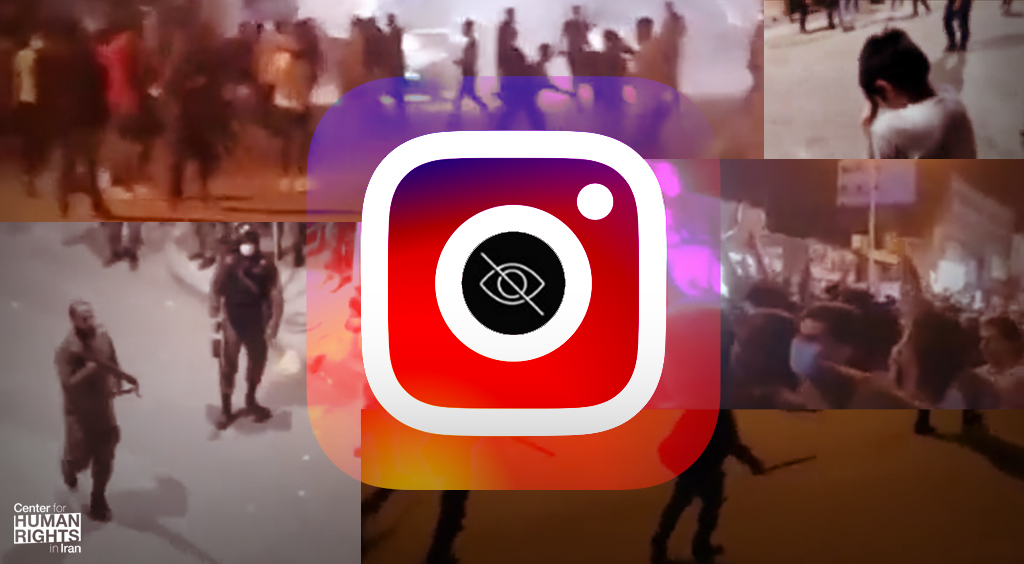

CHRI — In a joint statement released today, human rights organizations ARTICLE 19, Access Now, and the Center for Human Rights in Iran (CHRI) called on Meta to urgently revise its flawed content-moderation process for Persian-language content on the Instagram social media app.

The current process has enabled Iranian state agents to manipulate Meta into removing content considered critical of the Iranian government, even though that content does not violate Instagram’s community standards:

Instagram suffers from a deficit in trust and transparency when it comes to its content moderation practices for the Persian community. Despite acknowledgments by the company, the caseloads remain high through the communication and network mechanisms that organizations like ours alone maintain, which represent problems from only a small fraction of the community. Many Iranians are abandoning the platform because they distrust the platform’s policies and/or enforcement.

The full statement has been reprinted below.

Help support this urgent action letter by sharing it on social media while tagging Meta’s safety and security staff members including Chief Information Security Officer Guy Rosen (@guyro).

Iran: Meta Needs to Overhaul Persian-Language Content Moderation on Instagram

ARTICLE 19, Access Now, and the Center for Human Rights in Iran (CHRI) have come together to make recommendations to Meta and Meta’s Oversight Board to streamline processes to ensure freedom of expression is protected for users who rely on the social media platform in Iran, especially during protests.

On 7 June 2022, ARTICLE 19 hosted a RightsCon session that included Meta’s Content Policy manager Muhammad Abushaqra, Meta Oversight Board member Julie Owono, and BBC Persian’s Rana Rahimpour. The event covered Instagram’s Persian-language content moderation processes and problems. Instagram, which is owned by Meta, is now the main platform for communication in Iran, as it is the last remaining American social media app that is unblocked in the country, so the discussion focused on Meta’s regulation of this platform. According to polls, 53.1% of all Internet users in Iran use Instagram, making it the second most used app in the country after WhatsApp.

Instagram suffers from a deficit in trust and transparency when it comes to its content moderation practices for the Persian community. Despite acknowledgments by the company, the caseloads remain high through the communication and network mechanisms that organizations like ours alone maintain, which represent problems from only a small fraction of the community. Many Iranians are abandoning the platform because they distrust the platform’s policies and/or enforcement.

Following the discussion, ARTICLE 19, Access Now, and the Center for Human Rights in Iran (CHRI) are presenting the following recommendations to Meta with a view to helping the company better understand the complexities of Persian-language content, and ensure its content moderation practices uphold and protect the human rights and freedom of expression of everyone using Instagram.

Blocking Hashtags, Blocking Access to Information

Among the key issues discussed with Meta were mistakes and technical errors that prohibited people from accessing certain information, including blocks placed on hashtags. Reference was made in particular to the sanctions placed on the hashtag #IWillLightACandleToo used in support of the campaign for the families of the victims of the Ukraine International Airlines flight PS752 shot down in 2020, resulting in the deaths of all 176 people on board. The hashtag was temporarily blocked from being shared at the height of the campaign.

Speaking specifically about the censorship of #IWillLightACandleToo, the Meta representative explained that while the hashtag and the campaign did not violate any policies, the ability to search for the hashtag was temporarily locked because content unrelated to the main message of the campaign was being shared under the hashtag, and this content did violate Meta’s policies. The Meta representative suggested that the violating content was part of counter-narratives to refute the campaign and was immediately removed because it violated the company’s Dangerous Organizations and Individuals standards. However, in addition to such individual removals, locks have been placed on the searchability of hashtags that have multiple violations. Meta said that once it learned about the issue, following a large number of complaints, it took down the violating content and restored access to the hashtag. The Meta representative also asserted that there are systems in place to stop coordinated inauthentic behavior, and claimed that such behavior would have been detected in the case of the #IWillLightACandleToo campaign. As the BBC’s Rana Rahimpour noted during the event, however, the problem was only rectified because of substantial media attention to the campaign and because of the strong networks and platforms of the association of families of victims of flight PS752.

Recommendations

ARTICLE 19, Access Now, and CHRI urge Meta to create better rapid response systems to ensure that those without platforms or access to international human rights organizations can escalate the removal of erroneous locks and similar mistakes through more equitable processes. We further urge Meta to actively follow similar campaigns on Instagram to understand their context, prevent these mistakes, and eliminate the ability of bad actors, whether affiliated with a nation-state or not, to use Meta’s policies to the detriment of Persian-language human rights communities like those behind the PS752 association.

“Death to Khamenei” and Meta’s Consistent Inconsistencies

One of the recent issues raised within the Persian-language community has been the takedown of Instagram content containing the protest chant “death to Khamenei” or other “death to…” slogans against institutions associated with the dictatorship in Iran. Meta previously issued a temporary exception for “death to Khamenei” in July 2021, following explanations from the Iranian community that clarified the specific cultural context of such chants. The Meta spokesperson stated, however, that issuing exceptions goes against the company’s consistent policy of not allowing violent calls against leaders, even when they are rhetorical slogans. Yet Meta granted this kind of exception to allow for hate speech in the context of Russia’s invasion of Ukraine. While Meta has repeatedly declared that it must apply its policies consistently around the world, it has not clarified when and why such exceptions were granted in some instances, namely the case of Ukraine, but not in others. After the backlash over the exceptions, Meta did clarify that it will not allow statements calling for the death of any leaders, particularly leaders in Russia or Belarus. However, the rules governing its exceptions, which grant further carve-outs for Ukrainian speech relating to self-defense against Russian invaders, have remained vague.

Our organizations, however, are worried that this lack of nuance toward content documenting protests causes problematic takedowns of newsworthy protest posts or, in other cases, of posts that could help directly or indirectly corroborate human rights abuses. The lack of exception for this kind of speech is in direct contradiction to the six-part threshold test set out in the United Nations’ Rabat Plan of Action on the threshold for freedom of expression in its consideration of incitement to discrimination, hostility or violence, which requires the context and intent of a statement to be taken into account, among other considerations.

Recommendations

We propose that Meta clarifies in what cases they are making exceptions to allow for violent calls and why beyond the communications on leaders. We also encourage Meta to reinstate the ability for the Oversight Board to deliberate on content decisions in connection to the Russian invasion of Ukraine, especially to ensure oversight over these exceptions processes. We also ask Meta at the very least to preserve violating content. This is because allegedly offensive content from crisis zones is needed as corroborating human rights evidence.

We also urge the Meta Oversight Board to deliberate on protest content removed because of the slogan “death to Khamenei” to ensure consistency when it comes to granting exceptions, as well as to ensure its compliance with international human rights norms. We urge the Meta Oversight Board and Meta to work together to ensure appeals to the Oversight Board can be made beyond the two-week timeframe, as this has proven insufficient time for users who need to navigate the Oversight appeals applications in the midst of conflicts.

Lack of Transparency in Meta’s Moderation Processes

Two issues surrounding transparency were discussed during the RightsCon session: automated moderation processes and human review processes.

Automation

Participants discussed automated review processes whereby media banks or lexicons are used to remove phrases, images, or audio automatically. While Meta confirmed its use of these automated lists, we continue to ask for clarity on the content of these banks and lexicon lists. We note, for instance, that automated removals of content that does not support entities on the Dangerous Organizations and Individuals (DOI) list are rampant. Most relevant in the Iran context is the listing of the Revolutionary Guards on the DOI list. The Oversight Board recommended that the policy be enforced with more nuance in contexts that do not constitute support or praise of listed entities, and Meta accepted this recommendation, yet we continue to see the problem perpetuated. Affected content includes satire, criticism, news and political art expressing criticism of or objection to the Revolutionary Guards, which forms a large share of the news or expression content that Persian-language and even Arabic-language users (Syrian activists, for example) post on Meta-related platforms.

Recommendations

We call for more transparency on the automated processes. We further ask for clarifications on what is contained in media banks used for automated takedowns within the Persian market. Erroneous takedowns continue on the platform, as the processes in place do not allow for nuance on content that references DOI-listed entities. These takedowns violate the commitments that Meta has made in reaction to the two DOI-related Oversight Board recommendations that require nuance in their interpretations.

Human Review Processes

Revelations by the BBC that Iranian officials tried to bribe Persian-language moderators working for Meta at a center in Essen, Germany, run by content moderation contractor Telus International have also raised concerns about the oversight of human moderation processes. Another BBC report revealed a series of allegations, including a claim that there is not enough oversight of moderators to neutralize their biases. One anonymous moderator alleged that Meta only assesses the accuracy of 10% of what each moderator does. Meta clarified during this event that audits of reviewers’ decisions are based on a random sample, meaning it would not be possible for a reviewer to evade this system or choose which of their decisions are audited. Meta made public assurances to the BBC that it would conduct internal investigations, and during our event, representatives assured us that those investigations found high accuracy rates.

Recommendations

We worry that the random samples Meta says it uses to demonstrate accuracy might not disprove claims that a large gap remains in the oversight needed to ensure that bias cannot affect decisions. Additionally, we are worried that claims of “high accuracy” cannot be reconciled with the documentation and experiences of the Persian-language community, which faces constant streams of takedowns or unexplained “mistakes”. There must be clarity over what data is used to determine these accuracy statistics.

ARTICLE 19, Access Now, and the Center for Human Rights in Iran (CHRI) urge Meta to make this investigation transparent and to clarify in more detail the training and review processes that maintain the integrity of its moderation. We urge investment and resources to build trust among these impacted communities.

Shabtabnews In this dark night, I have lost my way – Arise from a corner, oh you the star of guidance.
